Week 2. Atlas of Weak Signals.

This second week we had the Atlas of Weak Signals course, which analyzes emerging issues, how they can be assessed and how they can be approached. It included a trip to Collserola.

December 1, 2021

It's crazy to think how far technology has come: we can now teach a computer to do tasks in a very short amount of time. This is definitely making our lives easier, but it also pushes us to think more deeply about the responsibility behind our choices, the things we design and what we consume. One of the exercises we did used Google Colab. Taller Estampa shared a file with us in which we uploaded a picture and it identified the elements in it, like buses, people, bowls, tables, chairs, handbags and all sorts of other things.

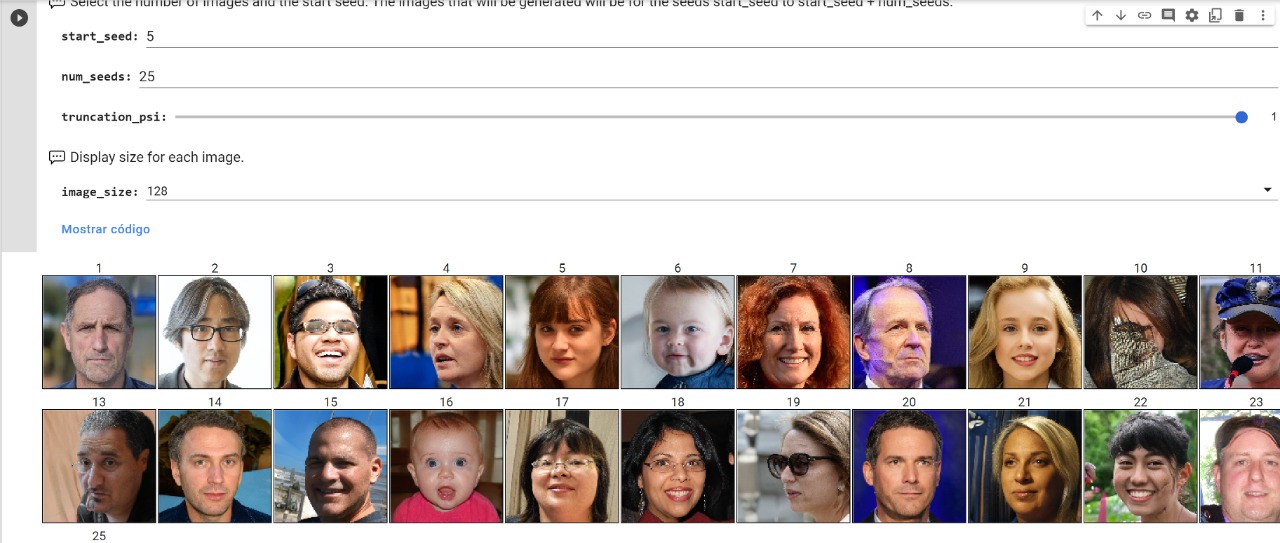
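Object detectors like this one typically return, for every thing they find, a label, a confidence score and a bounding box, and only the confident guesses end up drawn on the picture. Here is a minimal sketch of that last filtering step. The `Detection` type, the made-up scores and boxes, and the 0.5 threshold are hypothetical illustrations, not the actual output or settings of the Taller Estampa file.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Detection:
    label: str    # class name, e.g. "bus" or "person"
    score: float  # model confidence between 0 and 1
    box: tuple    # (x_min, y_min, x_max, y_max) in pixels


def keep_confident(detections, threshold=0.5):
    """Drop the detections the model is unsure about (hypothetical threshold)."""
    return [d for d in detections if d.score >= threshold]


# Hypothetical raw output for a street photo (made-up numbers).
raw = [
    Detection("bus", 0.97, (40, 60, 420, 300)),
    Detection("person", 0.91, (450, 120, 510, 330)),
    Detection("person", 0.88, (520, 110, 580, 335)),
    Detection("handbag", 0.46, (470, 220, 500, 260)),
    Detection("chair", 0.12, (10, 280, 60, 340)),
]

shown = keep_confident(raw)
print(Counter(d.label for d in shown))  # Counter({'person': 2, 'bus': 1})
```

Lowering the threshold would also show the handbag, at the cost of more wrong guesses.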

With another document we explored how images can be generated with machine learning. We explored different "seeds", each of which refers to a point in the latent space (and therefore to an image), and made some remixes between them.

This was done with a truncation of 1, which sticks to the more "normal" (whatever that means) parameters when tweaking the pictures. The next one was done with a truncation of 2. Prepare for the outcome, spoiler alert: it's super creepy.

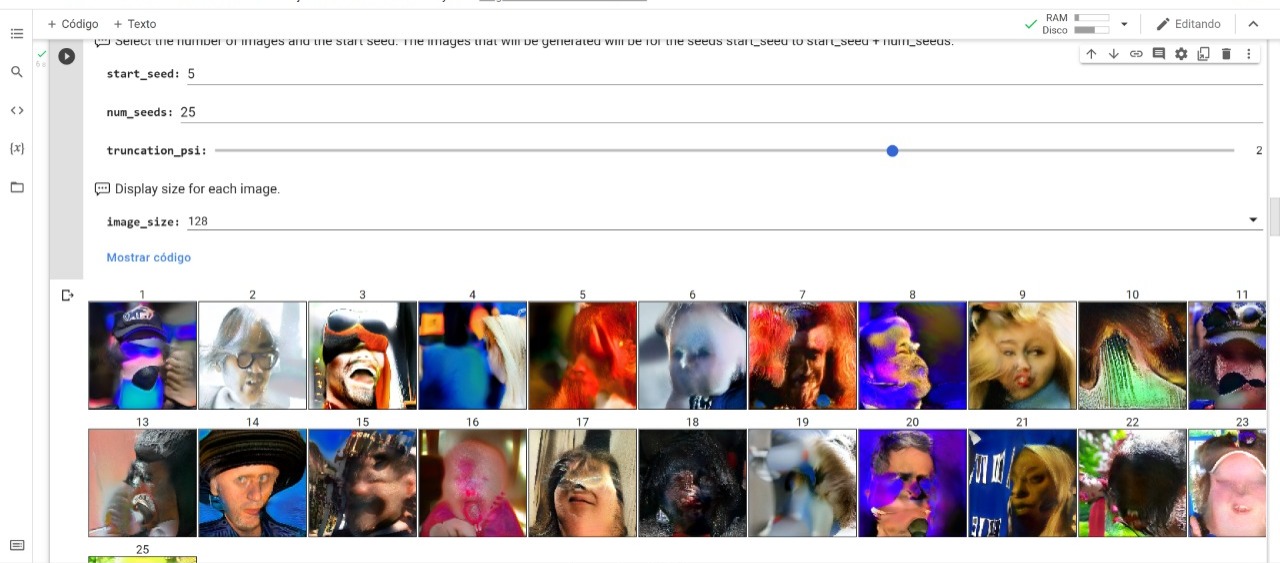

You can see here how the pictures were mixed, and since they had other elements in them like hats, microphones and glasses, the output is a weird combination of all of it. The last one I did was with a truncation of 3. This last outcome became more abstract than defined.

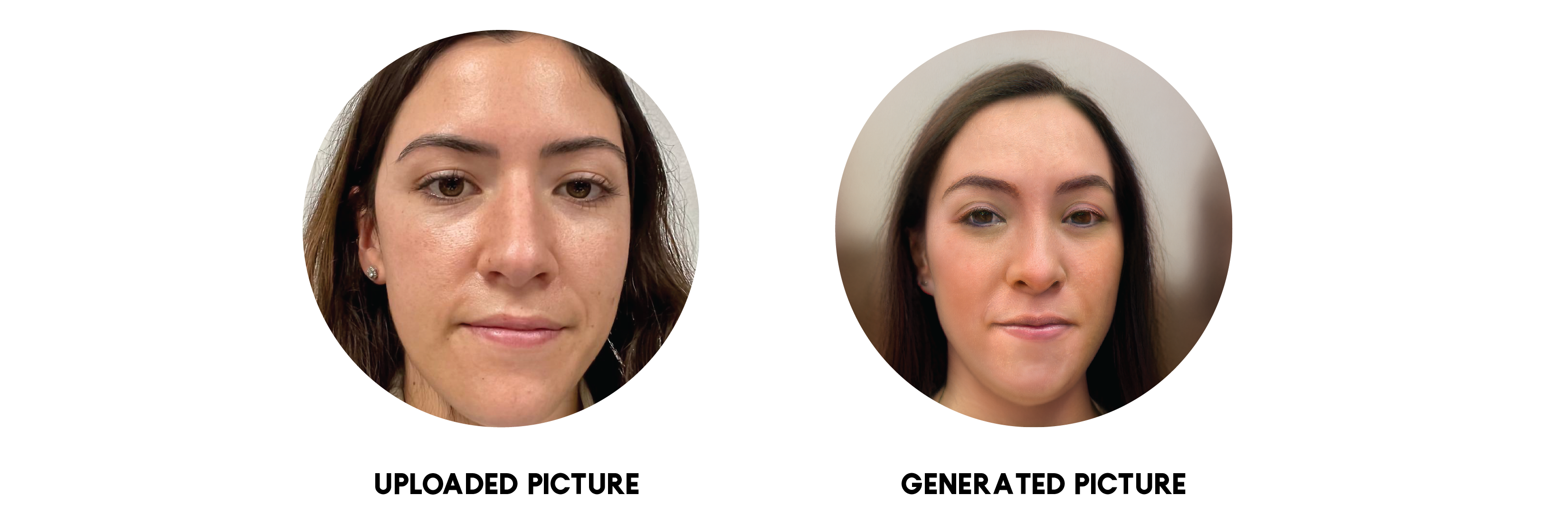

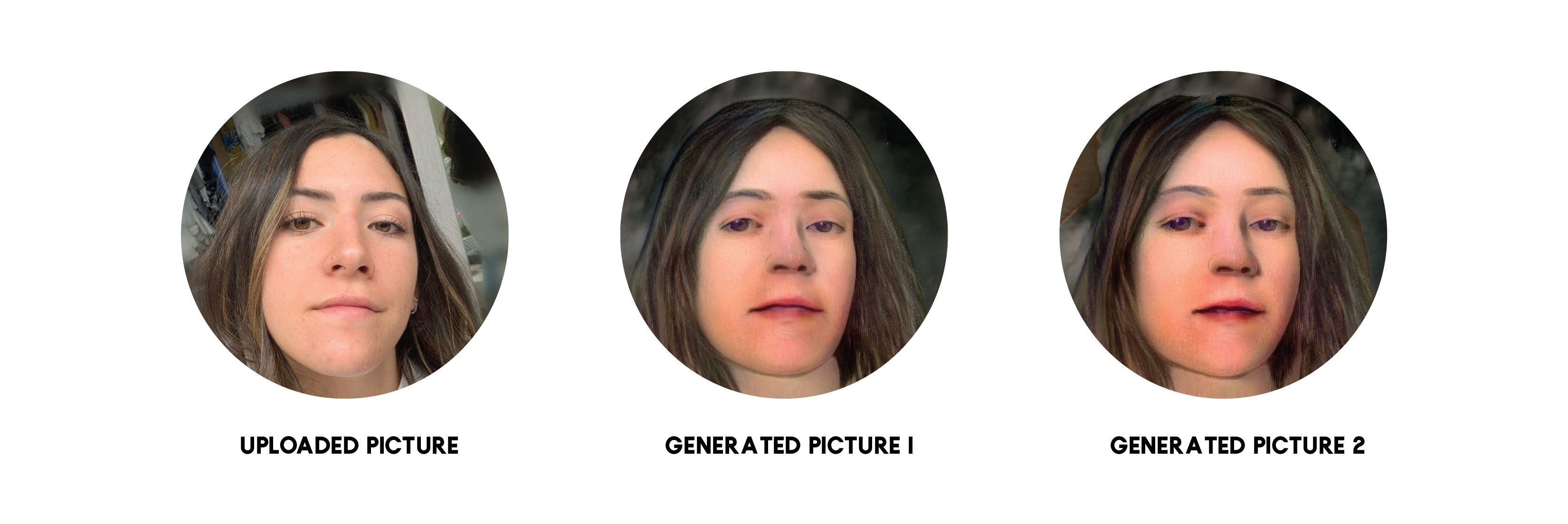
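To make "seeds", "remixes" and "truncation" a bit more concrete, here is a simplified sketch assuming a StyleGAN-style model (the notebook's own code isn't reproduced here). A seed just initializes a random number generator, so the same seed always gives the same latent vector. A remix is shown as linear interpolation between two latents, although the notebook may have used StyleGAN's style mixing instead. Truncation follows the usual "truncation trick", w' = w_avg + ψ(w − w_avg): ψ = 1 leaves a latent unchanged and larger values push it further away from the average face, which fits the increasingly strange results at 2 and 3. Simplifications to keep in mind: it uses Python's `random` instead of NumPy (so the vectors won't match the notebook's), a zero vector stands in for the average latent, and it skips the mapping network a real StyleGAN applies before truncating.

```python
import random

LATENT_DIM = 512  # assumption: a StyleGAN-like latent size


def seed_to_latent(seed, dim=LATENT_DIM):
    """The same seed always produces the same latent vector (one point in latent space)."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def mix(z_a, z_b, t):
    """Linear interpolation between two latents: t=0 gives z_a, t=1 gives z_b."""
    return [(1 - t) * a + t * b for a, b in zip(z_a, z_b)]


def truncate(w, w_avg, psi):
    """Truncation trick: scale the offset from the average latent by psi.
    psi < 1 pulls towards the average, psi > 1 pushes away from it."""
    return [m + psi * (x - m) for x, m in zip(w, w_avg)]


def distance(a, b):
    """Euclidean distance between two latent vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


z1, z2 = seed_to_latent(42), seed_to_latent(1234)
blend = mix(z1, z2, 0.5)       # halfway "remix" of the two seeds
w_avg = [0.0] * LATENT_DIM     # toy average; real models estimate it from many samples

# The further from the average, the less "normal" the generated face tends to look.
for psi in (1, 2, 3):
    print(psi, round(distance(truncate(blend, w_avg, psi), w_avg), 2))
```

Each printed distance is simply ψ times the first one, which is the whole idea: truncation rescales how far a latent strays from the average before the generator turns it into an image.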

The dataset used was Flickr-Faces-HQ (FFHQ), which consists of 70,000 face pictures that the network learned from in order to generate new images. After this we uploaded our own pictures and ran the program to see how it would modify them into a new image related to each one. So I uploaded my picture, and this was the first result I got.

I noticed that this outcome didn't look much like me, so I tried uploading another picture to see what it would give.

This one was even creepier than the first. Actually, the first time I uploaded this picture it identified one of my hair curls as a face, so I had to upload it again cropped specifically to my face. I don't know why, but when my picture was uploaded the contour was blurred, which affected the picture, and the program looked for something similar. As you can see in the outcome, everything around her face is blurred.
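Turning an uploaded photo into a "related" generated face usually works by projection (also called GAN inversion): the program searches the latent space for the vector whose generated image is as close as possible to the photo, then generates from that point. Below is a toy sketch of that search, assuming plain pixel-wise squared error and gradient descent. The tiny linear `generate` function is a hypothetical stand-in for the real network, and real projectors typically use a perceptual loss, a deep generator and many more steps. It might also hint at why the blurred contour mattered: the search tries to reproduce whatever is in the photo, blur included.

```python
def generate(z):
    """Hypothetical stand-in for a generator: a fixed linear map from a
    2-number latent to a 3-pixel "image". A real generator is a deep network."""
    return [z[0] + z[1], z[0] - z[1], 2 * z[0]]


def project(target, steps=500, lr=0.05):
    """Search the latent space by gradient descent on the squared pixel error."""
    z = [0.0, 0.0]
    for _ in range(steps):
        err = [p - t for p, t in zip(generate(z), target)]
        # Hand-derived gradient of sum(err**2) for the linear generator above.
        grad = [2 * (err[0] + err[1] + 2 * err[2]),
                2 * (err[0] - err[1])]
        z = [zi - lr * gi for zi, gi in zip(z, grad)]
    return z


photo = [1.0, 2.0, 3.0]  # toy "uploaded photo"
z_best = project(photo)
print([round(v, 3) for v in z_best], [round(v, 3) for v in generate(z_best)])
# [1.5, -0.5] [1.0, 2.0, 3.0]
```

The learning rate of 0.05 is chosen small enough for this toy generator to converge; with a real network it has to be tuned.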

We added a different network, trained on classic paintings, which gave the generated images a particular style. I uploaded my portrait again and mixed it with one of the seeds.

I also used the same seed and added the text variation "tropical forest" and this is what I got.

In the same way, I logged into a website called Replicate, which works similarly and generates images from the text you input. This next image was made by entering the words "Thriving nature".

I tried a different one, which I liked more. This one is called "Ocean revival".

This activity made me think about all the fake information we consume on the internet. It's so easy to make up things that look real; you can fake so many things and deceive people with them. I guess this is one of the risks of new technology and the internet. We just need to learn how to use it better.

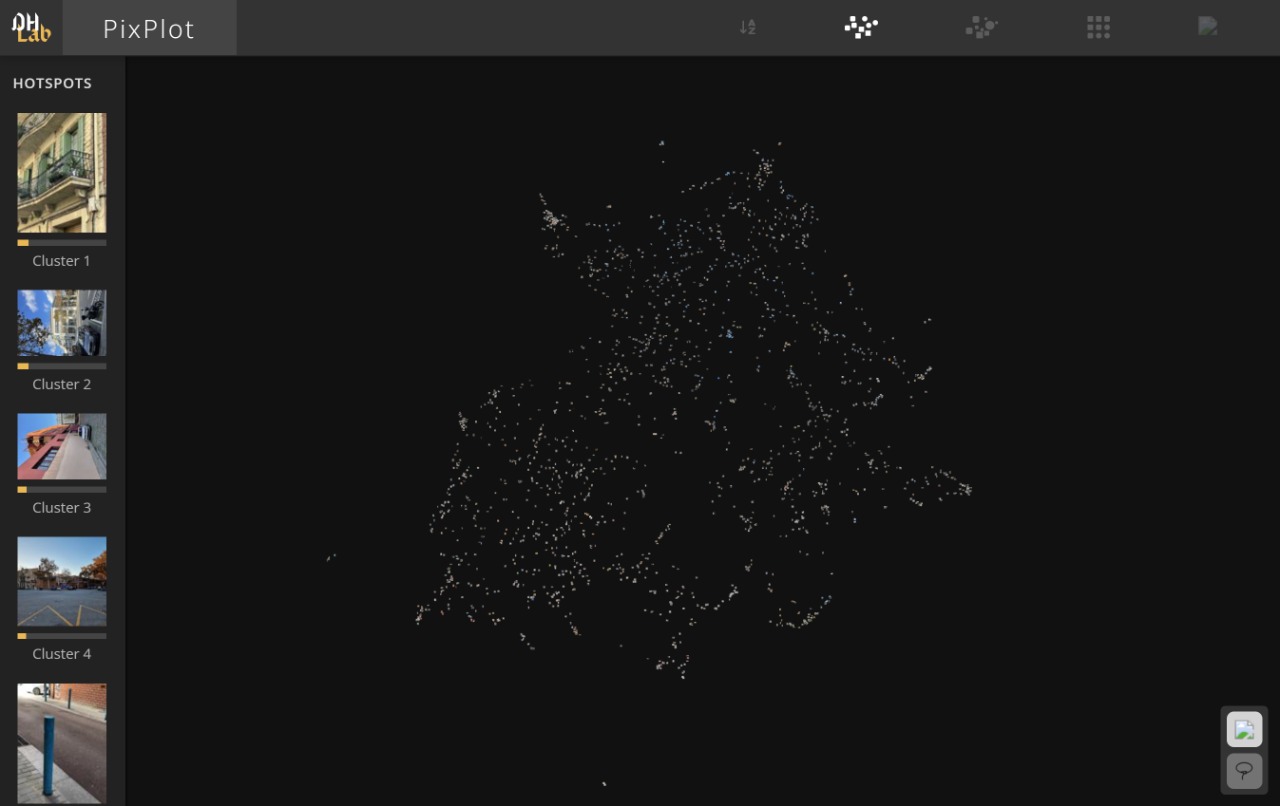

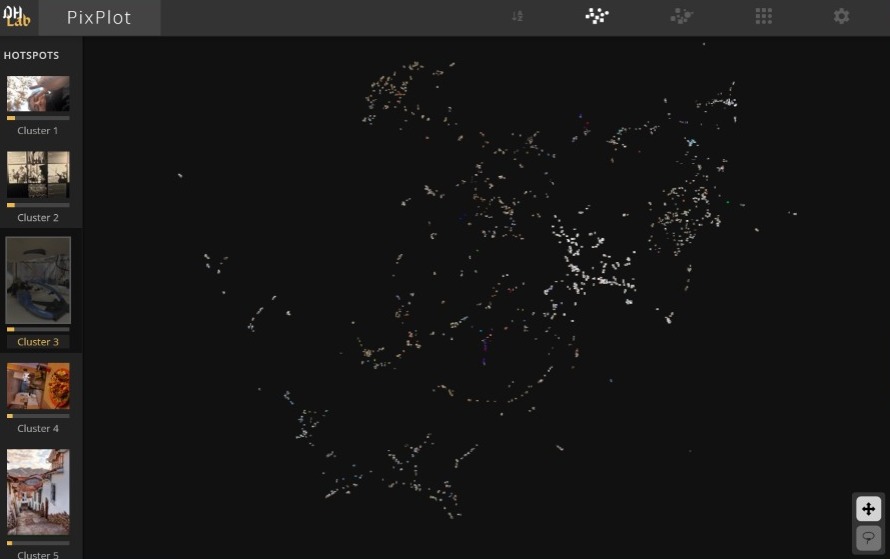

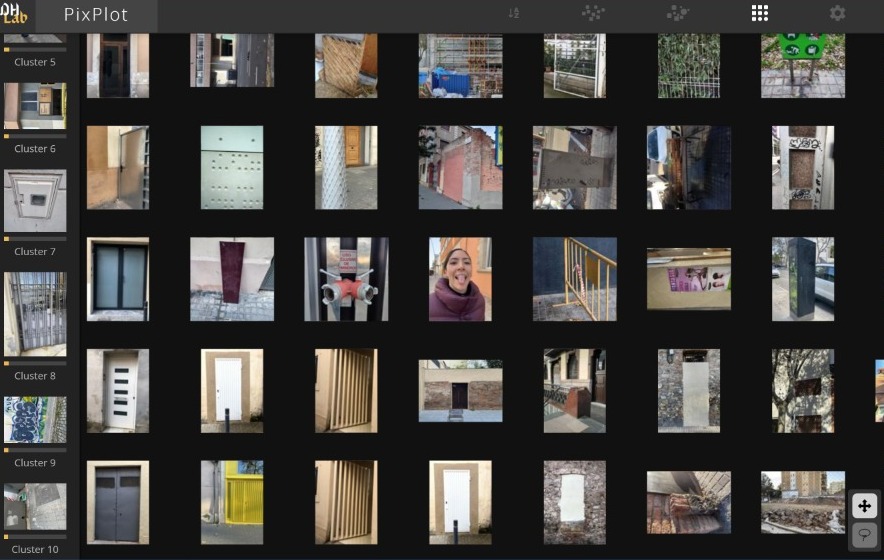

Another activity was to collect the last 100 pictures on our phones and to take 100 pictures of Poblenou representing both its new and its old aspects. For the Poblenou pictures we also aimed for them to be heterogeneous so they could be matched together. Taller Estampa collected all of our pictures and uploaded them to one site for the phone pictures and to another for the Poblenou ones. You can see in the following image the map of how the pictures were clustered into categories.

Compilation from Poblenou

Compilation from Phones

As you can see, the pictures were grouped into clusters to organize them: the program found similarities between the images and put similar ones together. I found it quite funny that a picture of me sticking out my tongue was clustered next to pictures of doors. I guess its color scheme was just very similar to theirs.
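We don't know exactly which features the clustering site compared (many tools use features from a neural network rather than raw colors), but the tongue-and-doors result is easy to reproduce with a much simpler approach. Here is a minimal sketch of plain k-means on each photo's average color, written from scratch; the photo names and RGB values are made up for illustration.

```python
import random


def dist2(a, b):
    """Squared Euclidean distance between two colors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, k, iters=20, seed=0):
    """Plain k-means: assign each point to its nearest center, then move each center to its group's mean."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for p in points:
            groups[min(range(k), key=lambda i: dist2(p, centers[i]))].append(p)
        # Keep the old center if a group ends up empty.
        centers = [
            tuple(sum(ch) / len(g) for ch in zip(*g)) if g else centers[i]
            for i, g in enumerate(groups)
        ]
    labels = [min(range(k), key=lambda i: dist2(p, centers[i])) for p in points]
    return labels, centers


# Hypothetical average (R, G, B) color per photo, made up for illustration.
photos = {
    "tongue_selfie": (150, 60, 55),
    "red_door": (160, 50, 45),
    "brown_door": (140, 70, 50),
    "sea": (30, 90, 170),
    "sky": (60, 120, 200),
}

labels, _ = kmeans(list(photos.values()), k=2)
for name, label in zip(photos, labels):
    print(label, name)
```

Since the algorithm only sees colors, a reddish selfie lands in the same group as reddish doors.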

We developed a machine learning project in which we used a neural network to create something speculative. We decided to try out GPT-3 to generate text. Our team came up with the idea of writing a Declaration of Ant Rights based on the UN Universal Declaration of Human Rights. Once we got access to the network, we started playing with the input: we picked some articles we liked and had the model rewrite them for ants. From the very first inputs we got convincing articles defending the rights of ants, and after re-inserting some of the generated articles we got even better ones. What we found super interesting is that the model picked up that we were talking about the ways human society is classified into groups like religion, race and nationality, and adapted them to ant groups within colonies, like workers, drones and queens. After a few more inputs it was writing articles about crime, an interspecies court of justice, human liability, torture and other interesting topics, and at that point the tool displayed a sensitive-content warning.

We built a website where you can read the full Declaration of Ant Rights, made with the best bits generated by the network. You can also sign the petition and write your own article if you think one is missing from the declaration. I encourage you to read them all. They are very convincing, and at first glance you wouldn't know that a machine wrote them. It makes you wonder what is written by humans and what by machines.
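Giving a model a few example articles and feeding its best answers back in is usually called few-shot prompting. Here is a minimal sketch of how such a prompt could be assembled. The instruction, the example articles and the "model output" are hypothetical placeholders rather than the team's actual inputs, and the call to GPT-3 itself is left out because it depends on how the network was accessed.

```python
def build_prompt(instruction, articles):
    """Few-shot prompt: an instruction, numbered example articles,
    and an open slot for the model to write the next one."""
    lines = [instruction, ""]
    for i, text in enumerate(articles, start=1):
        lines.append(f"Article {i}. {text}")
    lines.append(f"Article {len(articles) + 1}.")
    return "\n".join(lines)


# Placeholder examples for illustration, not the team's actual inputs.
instruction = "Rewrite the Universal Declaration of Human Rights for ants."
articles = [
    "All ants are born free and equal in dignity and rights.",
    "Every ant has the right to life, liberty and security within its colony.",
]

prompt = build_prompt(instruction, articles)
print(prompt)

# Feedback loop: keep the best article the model wrote and rebuild the prompt,
# so later generations build on it.
best_output = "No worker, drone or queen shall be held in slavery."  # hypothetical model output
articles.append(best_output)
prompt = build_prompt(instruction, articles)
```

Ending the prompt with an empty "Article N." slot nudges the model to continue the list in the same style instead of writing something else.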

The second week we had class with Ramon and Lucas, who continued with artificial intelligence, going a bit deeper into its ethics and again into the basics we need to understand it. We developed a small speculative project in which we used artificial intelligence to create a physical object. We decided to approach earthquakes, since they are a big issue in both Chile and Mexico. We discussed the main concerns around earthquakes: the damage to infrastructure, the personal trauma, the short time available to escape, the rush and stress of a life-threatening situation, the lack of information and communication after the event, and more. What we decided to focus on was preparation. So we came up with an object that would predict when the next earthquake would come and whether it would be life threatening or harmless. In a fun way, it would display a countdown to the earthquake, possibly with an earthquake survival kit that unlocks so you can escape safely.

The machine learning model would work by taking in data from previous earthquakes in Chile so it could make a prediction. This would obviously not be precise or even correct, but in a speculative way it could be useful to know when the next earthquake is going to hit, and maybe plan a nice vacation away from the affected area. You can check out our presentation here.
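As a rough illustration of the object's speculative countdown, here is a deliberately naive sketch: it uses the average gap between past earthquakes to guess the next date, averages their magnitudes to decide between "life threatening" and "harmless", and counts the days left. The event list, the 7.0 threshold and the function names are hypothetical, and this is not how earthquakes can actually be forecast; a real version of the project would train a model on Chile's historical earthquake records instead.

```python
from datetime import date, timedelta


def naive_forecast(events):
    """Next event = last event + average gap; expected size = average magnitude.
    A toy for a speculative object: real earthquakes cannot be forecast like this."""
    dates = sorted(d for d, _ in events)
    gaps = [(later - earlier).days for earlier, later in zip(dates, dates[1:])]
    mean_gap = sum(gaps) / len(gaps)
    next_date = dates[-1] + timedelta(days=round(mean_gap))
    mean_magnitude = sum(m for _, m in events) / len(events)
    return next_date, mean_magnitude


def countdown(next_date, today):
    """Days left on the object's display (negative once the date has passed)."""
    return (next_date - today).days


DANGER_MAGNITUDE = 7.0  # hypothetical threshold for "life threatening"

# Hypothetical event log (date, magnitude); NOT real seismic data.
past_quakes = [
    (date(2007, 3, 4), 6.2),
    (date(2011, 8, 19), 7.1),
    (date(2016, 1, 7), 6.6),
    (date(2020, 6, 30), 7.3),
]

next_quake, magnitude = naive_forecast(past_quakes)
days_left = countdown(next_quake, date(2021, 12, 1))
status = "life threatening: unlock the survival kit" if magnitude >= DANGER_MAGNITUDE else "harmless"
print(next_quake, days_left, status)
```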

